Prologue

This may be the first in a series of posts and articles on the various container and virtualization methodologies available in the Linux ecosystem. The plan is to start with the ones that are easiest to understand, then move to the more complex. Of course this is from my perspective of the complexity.

As with anything Linux, there are multiple ways to accomplish things. The same is true for containers and virtualization. My intent is to present the various concepts in as simple and non-technical a way as possible.

Initially I wanted to cover these as two subjects separately. But then I had an epiphany. Basically the two subjects are so intertwined as to be one subject. When one discusses the subject of either containers or virtualization in the Linux ecosystem, the other always comes up.

One thing I already expect to hear objections about is my classifying Virtual Machines (VMs) as containers. Many people will say containers are alternatives to VMs. It has been argued that a container is an isolated standalone environment including dependencies. However, I would argue that since you are setting a VM up to do something, it also meets this definition.

Regardless of your views, the fact is that in many instances containers and VMs are mentioned together, because they are different methods of accomplishing similar goals. Because of this I lean towards including them in this discussion regardless of how one classifies them.

I can also only speak to my direct experience, and I have not used every available technology that is out there. I have researched and worked with a few others in the past, but only briefly. I therefore have only limited first-hand experience, and lack some of the knowledge that can only come from actual long-term usage.

That being said, I also want to be clear that I am making no recommendations or promoting any specific solution. They all have advantages and disadvantages. Your situation will be different than mine, and what fits my needs will most likely not fit your needs.

As an example, I am using Linux as the primary driver on my main desktop. I do have a Windows 11 install, but have not booted it in the last couple of months. And I have not used a Mac since the late 1980s. If one looks at the statistics, 95% of desktop users don’t use Linux. So what works for me in Linux may or may not work for you in whatever Operating System you are using.

My best advice is to understand what the various systems are and what they can do for you. Try several different ones, based on your needs. Then select the ones that work best for what you do.

Definitions

Before getting into specifics of various virtualization and containerization methods, we need to define some jargon and technical terms, as it is impossible to discuss these subjects without using some jargon. Consider this to be an introduction to both the concepts and terms used to describe virtualization and containerization.

- Hardware: These are all the physical components that make up your computer: things you can actually touch and hold in your hand, like the memory, storage drives, CPU, network adapter, graphics card, etc. This does not include software, because while you can hold a physical disk or CD, you can’t hold the information stored on it.

- Host: This also refers to your computer, but more specifically to the operating system software running on it. The host is the only software that can directly access the hardware.

- Kernel: This is software on the host system that manages both hardware and software. The kernel can be either monolithic or a microkernel. There are also kernel modules that allow the kernel to manage specific hardware and software.

- Operating System (OS): The Operating System includes a kernel and various other utilities that allow applications and users to interact with the system.

- Hypervisor: The hypervisor creates and manages virtual hardware, virtual networks, and virtual machines. There are two basic types of hypervisor. Type 1 hypervisors run directly on the hardware and are the de facto operating system. Type 2 hypervisors run on top of a separate host operating system, adding an extra layer to the virtual emulation.

- Virtual Hardware: This is a software emulation of hardware created and maintained by the hypervisor. It becomes the basis of a virtual machine.

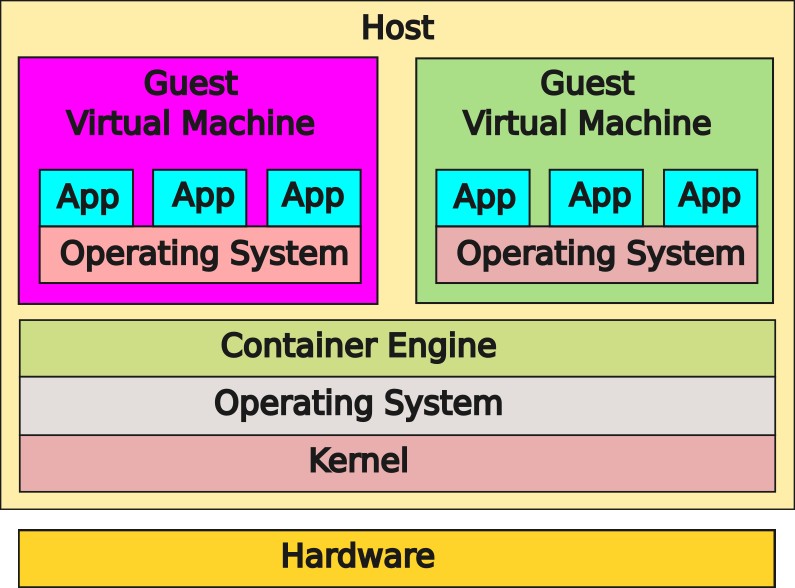

- Guest: An operating system that runs on top of virtual hardware. While the guest runs on the host, it is a separate entity, its own container. Each guest virtual machine has its own kernel and operating system.

- Application: Any program that runs on an operating system. This can be either the host or the guest operating system. In point of fact, a Type 2 hypervisor is an application running on the host operating system.

- Pass through: This simply means passing actual hardware directly to the virtual machine instead of using virtual hardware. Some common examples are passing through a directory from the host so data can be shared, passing through USB ports so the guest has access to the host’s USB drives, and passing through the GPU for virtual machines that need higher levels of graphical performance.

Virtual Machines (VM)

This is the simplest to understand. A Hypervisor simulates (virtual) hardware, then creates a VM on top of that Virtual Hardware. The Guest VM runs in isolation from the Host system and hardware, with some exceptions being deliberately added (Pass through of USB ports, Graphics cards, etc).

One of the side effects of this process is that hardware resources must be divided up between the Host and any running Guests. It also means that a Guest VM can run pretty much any Operating System (OS), as it is isolated from the host. One could run a Windows Guest on a Linux Host or vice versa.

The image above shows a Type 2 Hypervisor, as it is running on top of a separate operating system. A Type 1 Hypervisor would run directly on the hardware without the need for a separate OS (the Type 1 Hypervisor is the de facto OS). Generally, Type 2 Hypervisors are considered slower than Type 1 Hypervisors because of the additional layer of a separate operating system.
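As a rough illustration of that extra layer, the two arrangements can be written out as simple lists. This is only a sketch: the layer names and the `layers_below_guest` helper are made up for this post and do not correspond to any real hypervisor's interface.

```python
# Hypothetical layer stacks, listed from the bottom (hardware) up to the guest.
TYPE_1_STACK = ["hardware", "hypervisor", "virtual hardware", "guest OS"]
TYPE_2_STACK = ["hardware", "host OS", "hypervisor", "virtual hardware", "guest OS"]


def layers_below_guest(stack):
    """Count how many layers sit underneath the guest OS in a stack."""
    return stack.index("guest OS")


print(layers_below_guest(TYPE_1_STACK))  # 3
print(layers_below_guest(TYPE_2_STACK))  # 4
```

The only difference between the two lists is the host OS entry, which is the extra layer a Type 2 setup has to pass through.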

The various applications I have used to create VMs are Oracle’s VirtualBox, Proxmox (which uses KVM), and the libvirt/QEMU/KVM stack.

VMs vs Containers

While a Virtual machine may be a container, when we talk about containers specifically, they have a slightly different meaning. As a general principle a container is a partial virtualization. It is probably best to use a simple generic example to explain this.

Let’s say you are running Debian 12 as the operating system on a host. Let’s say you want to run a web server and a gaming server, but want them to be isolated from your host. You decide to set up two guests (virtual machines), both of which will also be using Debian 12. When everything is up and running we will have three kernels (host and two guests) as well as three identical operating systems. Our hardware resources will need to be distributed between three systems. Hardware resources are also managed by the kernel, which means that while you can assign maximum resource usage, the kernel will decide what to actually give a Guest based on its usage and demand.
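To make the resource split concrete, here is a small sketch of the memory budget for this example. The numbers, the `host_reserve_mb` value, and the `memory_headroom` function are all assumptions for illustration; real hypervisors account for memory in their own ways.

```python
def memory_headroom(total_mb, guest_max_mb, host_reserve_mb=2048):
    """Return the memory left over if every guest used its full maximum.

    A negative result means the maximums add up to more than the machine
    has, so the guests cannot all reach their limits at the same time.
    """
    committed = host_reserve_mb + sum(guest_max_mb.values())
    return total_mb - committed


# Hypothetical maximums for the two guests in the example above.
guests = {"web-server": 4096, "game-server": 8192}

print(memory_headroom(16384, guests))  # 2048
print(memory_headroom(8192, guests))   # -6144
```

With 16 GB the maximums fit with room to spare. With 8 GB they do not, which is where the kernel deciding what each Guest actually gets starts to matter.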

With a container, by contrast, the guest shares the host’s kernel instead of running its own, so only part of the system is virtualized. These types of containers are created by applications like Linux Containers (LXC), Podman, or Docker. While lighter to implement, they do rely on the guest being compatible with the host. For example, one cannot run Windows on Linux using this type of system. Technically you can, but it involves additional complications to make it work.
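The difference from the three-kernel VM example can be sketched as a simple count. This assumes that containers of this type share the host’s kernel rather than booting their own; the `Guest` class and `count_kernels` function are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Guest:
    name: str
    kind: str  # "vm" for a full virtual machine, "container" for an LXC/Podman/Docker-style container


def count_kernels(guests):
    """Count running kernels: one for the host, plus one for each full VM.

    Containers are assumed to share the host's kernel, so they add none.
    """
    return 1 + sum(1 for g in guests if g.kind == "vm")


as_vms = [Guest("web-server", "vm"), Guest("game-server", "vm")]
as_containers = [Guest("web-server", "container"), Guest("game-server", "container")]

print(count_kernels(as_vms))         # 3
print(count_kernels(as_containers))  # 1
```

The same two servers need three kernels as VMs but only the host’s kernel as containers, which is why this approach is lighter and also why the guest has to be compatible with the host.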

This type of container can be taken a step further by removing the operating system from the guest and just running a containerized application. Technically, bits and pieces of an OS will be part of the container to meet dependency requirements. But this type of container will strictly be running a specific application.

This type of container is set up by applications like Flatpak, Snap, and AppImage.

Epilogue

That’s our high-level look at Containerization and Virtualization. My hope is that I have given the average user some idea of what is going on with this technology, as it is increasingly used, and not just in Linux.

I have endeavored for the most part to stay away from specifics. And I am aware that my presentation here may not fit perfectly with many implementations of containers and virtualization. But it was never the intent to provide specific information about any of the systems out there.

An example of where this technology is being used is immutable or atomic distributions. When I was testing Vanilla OS and Fedora Kinoite, large portions of the operating system were containerized. And instead of upgrading individual files, one just upgraded the entire container.

Virtualization and Containerization are good things, yes? Well, as one might guess, there are issues that need to be addressed. However, while there is some commonality, the individual issues need to be evaluated for the specific Hypervisor/Container Engine being used.

Some common takeaways: if you want to run dissimilar OSs, full virtualization is the best way to do it. Containers can provide similar services with better resource management, but require a similar OS on the Host and the Guest.